Let's say you have a damaged, unmountable btrfs filesystem. You run btrfs check, which tells you about some problems, but the documentation strongly advises you not to run btrfs check --repair.

WARNING: the repair mode is considered dangerous and should not be used without prior analysis of problems found on the filesystem.

While btrfs check tells you the problems it finds, it does not tell you what changes it would make. It would be great if "repair" had a mode that would tell you what changes it would make, without committing yourself to those changes. Sadly there is no such mode - so what options do we have?

Brute force?

The brute force approach is to back up the devices comprising the damaged btrfs filesystem to another location, so that they can be restored if btrfs check --repair doesn't work out. This could be a lot of data to back up and restore, especially if the filesystem consists of multiple volumes comprising multiple terabytes of data.

What about...

Instead of brute force we could use virtualised discs and a hypervisor. Qemu's qcow2 disc format lets us set up virtual discs with a backing volume set to the damaged drive/partition. Reads from the virtual disc come from the backing volume, but any writes are made to the virtual disc rather than the backing volume. As a result we don't need huge quantities of storage to back up the data, and if we don't like the changes that were made by "repair" or other tools we can easily reset by deleting the virtual discs or by using qemu's snapshot feature.

This copy-on-write technique can be used not just with btrfs and "btrfs check --repair" but with other filesystems and other tools to repair those filesystems. For example, you could use it to try out edits to the filesystem structure made with a programme you'd written yourself, as I talk about in another post.

A complication with btrfs filesystems is that a device id is stored inside volumes of a filesystem. When mounting/checking, btrfs will scan all discs and partitions to find devices, and mount them if the device ids match, so you do not want both the original disc and the virtual disc to be visible at the same time. Inside a virtual machine you avoid this simply by ensuring only the virtual discs are mapped into the virtual machine. That is, don't map in the original file systems as raw discs!
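As a rough sanity check before running any btrfs tool inside the VM, you could look for the same device identifier showing up on more than one block device. The sketch below assumes the identifier in question is what blkid reports as UUID_SUB for btrfs members, and that it is run as root inside the VM; the function names are made up for illustration.

```shell
# Reads "device id" pairs on stdin and prints every id that is
# seen on more than one device, followed by those devices.
duplicate_device_ids() {
    awk 'NF == 2 { n[$2]++; devs[$2] = devs[$2] " " $1 }
         END { for (id in n) if (n[id] > 1) print id ":" devs[id] }'
}

# Prints one "device id" pair per btrfs member device that blkid can see.
# Assumption: UUID_SUB is the per-device identifier for btrfs.
list_btrfs_device_ids() {
    for dev in $(blkid -t TYPE=btrfs -o device); do
        printf '%s %s\n' "$dev" "$(blkid -s UUID_SUB -o value "$dev")"
    done
}

# Usage (as root, inside the VM); no output means no duplicates were found:
#   list_btrfs_device_ids | duplicate_device_ids
```

Any output here would suggest that an original disc has been mapped into the VM alongside its virtual copy.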

Setting up a kvm virtual machine

You'll need a virtual machine to mount these virtual filesystems. If you don't already have a virtual machine set up, you'll need to create one. As we're using qemu virtual discs, this means you need to use the kvm/qemu/libvirtd hypervisor stack. There are several ways to set up a virtual machine and many good guides on the web about how to do this, so I won't repeat them here but I'll just say that on my Ubuntu system I used uvtool to quickly set up the VM to test my filesystem changes.

Setting up copy-on-write virtual discs

The qemu-img command creates these virtual discs. If a btrfs filesystem comprises multiple discs or partitions, we need to create a separate virtual disc for each physical disc/partition.

The following command creates a virtual disc that uses /dev/sdd2 as its backing file, and any changes to this virtual disc are written to the file sdd2_cow.qcow2.

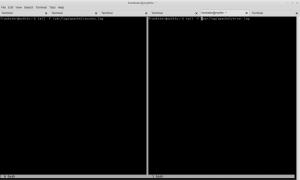

qemu-img create -f qcow2 -o backing_file=/dev/sdd2 -o backing_fmt=raw sdd2_cow.qcow2

I ran similar commands once for each underlying disc/partition that was part of the btrfs filesystem.
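If there are several devices, the same command can be wrapped in a small loop. This is only a sketch: the helper names (overlay_name, create_overlay, reset_overlay) are invented for illustration, the overlay files are written to the current directory using the naming pattern from the command above, and /dev/sde2 is just an example second device. You will probably need enough privileges to read the backing devices.

```shell
# Derive the overlay file name from a device path,
# e.g. /dev/sdd2 -> sdd2_cow.qcow2
overlay_name() {
    printf '%s_cow.qcow2\n' "$(basename "$1")"
}

# Create a qcow2 overlay in the current directory, backed by the raw device.
create_overlay() {
    qemu-img create -f qcow2 -o backing_file="$1" -o backing_fmt=raw \
        "$(overlay_name "$1")"
}

# Throw away every change made so far by deleting and recreating the overlay.
# Only do this while the VM using the overlay is shut down.
reset_overlay() {
    rm -f -- "$(overlay_name "$1")" && create_overlay "$1"
}

# Usage:
#   for dev in /dev/sdd2 /dev/sde2; do create_overlay "$dev"; done
#   qemu-img info sdd2_cow.qcow2    # should list /dev/sdd2 as the backing file
```

Recreating the overlay is the "reset by deleting the virtual discs" option in script form; the backing device itself is never written to by these commands.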

To attach one of these discs to an existing VM the basic command is:

virsh attach-disk <VM NAME> /home/user/sdd2_cow.qcow2 vde --config --subdriver qcow2

However, on Ubuntu I ran into some (reasonable) restrictions imposed by apparmor. Attempting to attach the virtual discs failed, with an error from apparmor showing up in the logs:

[56880.068334] audit: type=1400 audit(1673655958.288:119): apparmor="DENIED" operation="open" profile="libvirt-c712b749-0f68-413f-9bd7-76ea061808eb" name="/dev/sde2" pid=58733 comm="qemu-system-x86" requested_mask="r" denied_mask="r" fsuid=64055 ouid=64055

The problem is that the hypervisor is by default prevented from accessing the host's raw discs. When the hypervisor attempts to read the backing file (or later, to lock it) apparmor prevents this. We solve this by adding lines to /etc/apparmor.d/local/abstractions/libvirt-qemu for each raw disc or partition that backs one of our virtual discs.

/dev/sde2 rk,

This line allows libvirt/qemu to open the raw disc for read access (r), and allows the raw disc to be locked (k). We do not grant write access to libvirt/qemu, so this provides an extra line of defence against unintended changes to our original filesystems.
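With several backing devices it may be easier to generate these lines than to type them. A minimal sketch, where apparmor_rules is a made-up helper name and the device list is only an example:

```shell
# Print one read+lock (rk) rule per backing device, in the same form
# as the line shown above.
apparmor_rules() {
    for dev in "$@"; do
        printf '%s rk,\n' "$dev"
    done
}

# Usage: review the output first, then append it to the local override file:
#   apparmor_rules /dev/sdd2 /dev/sde2
#   apparmor_rules /dev/sdd2 /dev/sde2 | \
#       sudo tee -a /etc/apparmor.d/local/abstractions/libvirt-qemu
```

My assumption is that the new rules are picked up the next time the VM is started; if the denial still shows up in the logs, the profile may need reloading first.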

Once apparmor was configured, I could attach these discs to my virtual machine, and access the virtual devices at locations such as /dev/vde.
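For a filesystem with several members, the attach command can be repeated for each overlay. The sketch below is an illustration rather than what I actually ran: attach_overlays and run are invented helper names, each argument pairs an absolute overlay path with a target name as PATH=TARGET, and setting DRY_RUN=1 only prints the virsh commands so they can be checked first.

```shell
# Run a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# attach_overlays VM PATH=TARGET [PATH=TARGET ...]
# Paths must be absolute and must not contain '='.
attach_overlays() {
    vm=$1
    shift
    for pair in "$@"; do
        overlay=${pair%%=*}   # text before the first '='
        target=${pair#*=}     # text after the first '='
        run virsh attach-disk "$vm" "$overlay" "$target" \
            --config --subdriver qcow2 || return 1
    done
}

# Usage (the VM name and the second overlay are examples):
#   DRY_RUN=1 attach_overlays myvm /home/user/sdd2_cow.qcow2=vde \
#                                  /home/user/sde2_cow.qcow2=vdf
#   virsh domblklist myvm    # list the discs now defined for the VM
```

Because --config changes the stored VM definition, the discs should appear the next time the VM is started.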

Final thoughts

With this or similar techniques you can safely test fixes to damaged filesystems without risking the underlying data. Once you're happy that your fix will have the desired effect, you can apply it to the real filesystem.
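As a concrete version of that workflow, a trial run inside the VM might look something like the sketch below. It assumes the filesystem shows up at /dev/vde, that it is run as root inside the VM, and that /mnt is free to use as a mount point; trial_repair and run are hypothetical helper names, and DRY_RUN=1 only prints the commands.

```shell
# Run a command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then printf '%s\n' "$*"; else "$@"; fi
}

# trial_repair DEVICE [MOUNTPOINT]
trial_repair() {
    dev=$1
    mnt=${2:-/mnt}
    # Let btrfs discover every member device visible inside the VM.
    run btrfs device scan
    # Read-only check first; a failure is expected on a damaged filesystem.
    run btrfs check "$dev" || echo "check reported problems" >&2
    # The risky step: all writes land in the qcow2 overlay, not the real disc.
    run btrfs check --repair "$dev" || return 1
    # Check again, then mount read-only to inspect the result.
    run btrfs check "$dev" || return 1
    run mount -o ro "$dev" "$mnt"
}

# Usage:
#   DRY_RUN=1 trial_repair /dev/vde    # preview the commands
#   trial_repair /dev/vde              # run them against the virtual disc
```

If the outcome is not what you hoped for, shut the VM down, reset the virtual discs and try a different approach.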